There’s a popular myth that keeps popping up that I wanted to post about.

Why is it so popular?

Well, because it seems intuitive if you aren’t working with SharePoint on a regular basis. If you are then I’m sure you don’t think this… and if you did, well shortly you’ll know the truth.

So here’s the myth:

“We don’t need to split our content across separate content databases because if we need more than 200GB support for each database we will [1] move subsites around to different site collections in different databases or [2] use remote blob storage and put it all on file shares… then we’ll have a very small content database size.”

Why is this a myth?

Let’s address the second part of the statement first – “[2] use remote blob storage and put it all on file shares… then we’ll have a very small content database size”. This is a myth because the content database will still not be supported by Microsoft. The reason is that both the database size itself PLUS the content offloaded and stored on file shares count towards the 200GB limit (or 4TB if you meet additional requirements). This means that even if you had a 1GB database and 225GB offloaded onto file shares for this content database, you’re actually at 226GB and therefore not supported unless you meet those additional requirements. If you do meet the requirements and have a 1GB database with 4.5TB offloaded onto file shares for a specific content database, you’re now at just over 4.5TB of content, which exceeds the 4TB limit, so again, not supported.

From http://technet.microsoft.com/en-us/library/cc262787.aspx: “If you are using Remote BLOB Storage (RBS), the total volume of remote BLOB storage and metadata in the content database must not exceed this limit.”
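To make the arithmetic concrete, here is a minimal sketch in Python. The 200GB and 4TB figures come from the limits discussed above; the function names, the `meets_additional_requirements` flag, and the 1TB = 1024GB conversion are my own assumptions for illustration, not anything SharePoint provides.

```python
# Hypothetical helper: illustrates how the limit is counted, nothing more.
GB_PER_TB = 1024  # assumption: binary terabytes

GENERAL_LIMIT_GB = 200             # general content database limit
EXTENDED_LIMIT_GB = 4 * GB_PER_TB  # 4TB, only if additional requirements are met


def effective_size_gb(database_gb, rbs_gb):
    """Size that counts against the limit: database PLUS offloaded BLOBs."""
    return database_gb + rbs_gb


def is_supported(database_gb, rbs_gb, meets_additional_requirements=False):
    """Return True if the combined size is within the applicable limit."""
    limit = EXTENDED_LIMIT_GB if meets_additional_requirements else GENERAL_LIMIT_GB
    return effective_size_gb(database_gb, rbs_gb) <= limit


# The two scenarios from the text above
small_db_big_rbs = is_supported(1, 225)
huge_rbs = is_supported(1, 4.5 * GB_PER_TB, meets_additional_requirements=True)
```

In both scenarios the result is `False`: offloading content to file shares shrinks the database file, but not the number that is measured against the limit.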

Now let’s address the first part of the statement: “we will [1] move subsites around to different site collections in different databases”. This is also a myth because although it is doable, that doesn’t make it a good idea. Do you remember that old Chris Rock line: “You can drive your car with your feet if you want to, but that don’t make it a good, [expletive] idea”? Well, it’s the same here. So why isn’t it a good idea? In this case it’s because subsites are contained within a site collection, and there is a close relationship between a site collection and its subsites. Objects such as site columns and content types are associated with and shared by subsites. If you want to move an individual subsite, you have to consider how you are going to move these shared objects as well, and this is where it gets tricky, because a number of objects are difficult to move: workflow history and approval field values, for example. Even if you use third-party tools to move your subsites, you will likely encounter issues.

Ok I get it, but what do you recommend… what is the fix?

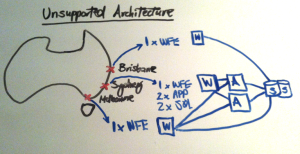

Essentially what needs to happen is that the architecture of the SharePoint environment should be considered carefully up front as much as possible, in conjunction with:

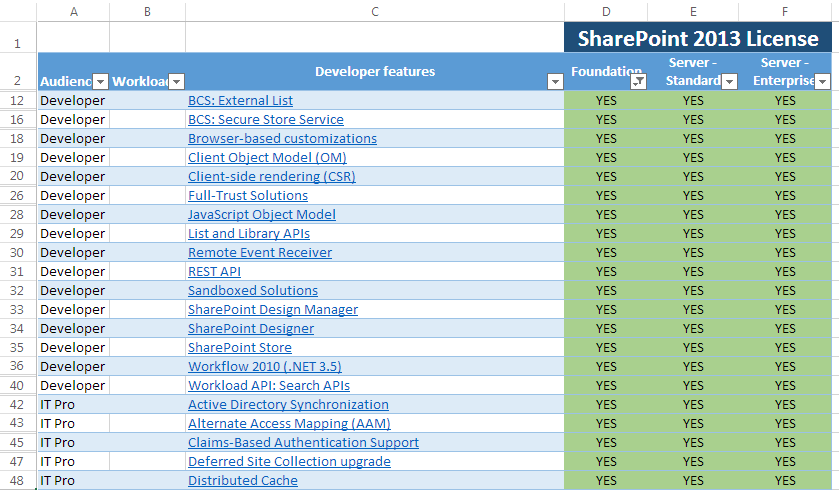

- Software Boundaries and Limits for SharePoint – http://technet.microsoft.com/en-us/library/cc262787.aspx

- Plan Logical Architectures for SharePoint – http://technet.microsoft.com/en-us/library/ff829836.aspx

- Information Management and Governance in SharePoint – http://technet.microsoft.com/en-us/library/cc262900.aspx

- Experience – It can be tricky to get your SharePoint architecture correct so hopefully you will be able to work with / be guided by a colleague or business partner that is experienced in SharePoint architecture

You may be thinking that surely Microsoft should just raise their support limits even higher, or that subsites should be movable with fuller fidelity. I understand this point and was guilty of thinking it myself when I first started working with SharePoint. As time went on, though, I also asked myself: is there any other product that offers all of the functionality that SharePoint does and has comparable supportability limits? Frankly, I couldn’t think of any. Besides, given that there is full transparency about the supportability limits and a wealth of information on TechNet that makes it clear (at least to IT pros) what to do and what not to do, I’m happy with this, at least for now.